Insights 4: Yoodli Unstealthed, Large Language Models, Task Centric AI

Yoodli's

coming out of stealth and TheSequence's profiling ofWhyLabs.ai

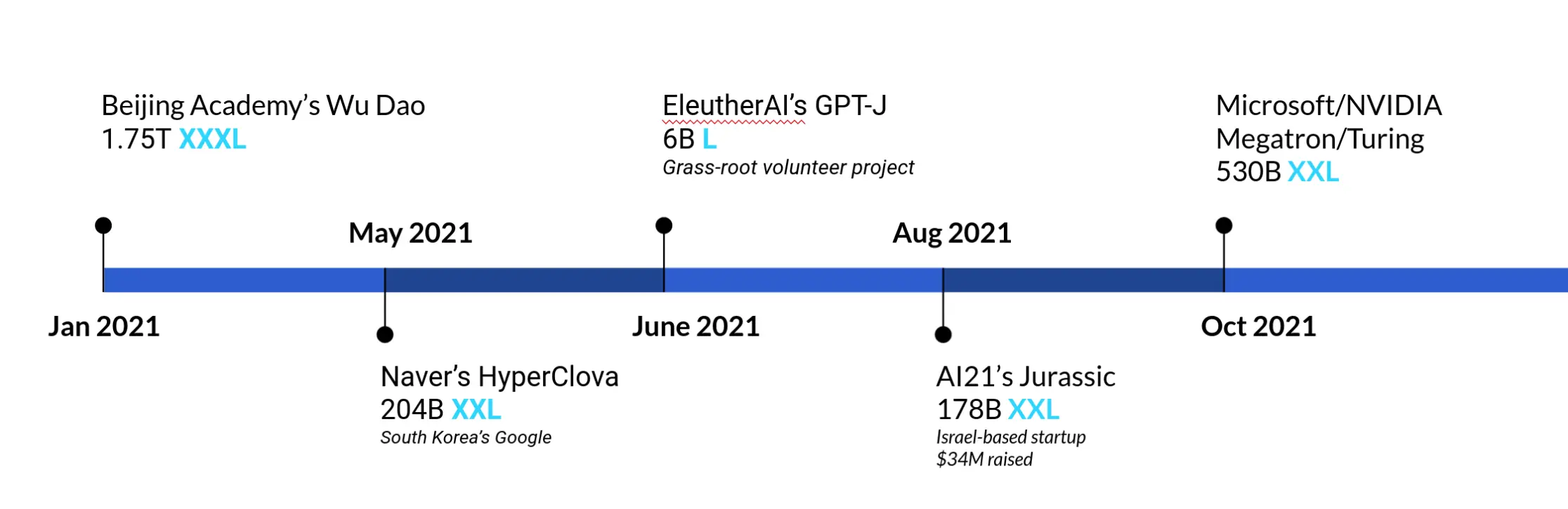

. We will give you a scoop on these two cool companies (insider secret: they are both hiring for multiple roles!). We will also pick out two tidbits from the annual State of AI report that are interesting to us. The first one is the rise of AI-first, full-stack drug discovery and development companies. The second is the invasion of extremely large language models that open the door for both exciting opportunities as well as harmful misuse we should be aware about and actively work on to address. We ponder whether the future of AI will continue to be data-centric per Andrew Ng, or would there be scenarios where we rely less on (large amount of) data given the learning efficiency of these large models.Semantic Scholar

is turning 6. We launched S2 (our internal nickname for Semantic Scholar) on November 2, 2015. Year by year, the product grew better with expanded coverage and unique features. I rely heavily on the folder-centric personal library feature to organize my to-read lists and was delighted to learn earlier this month that S2 now recommends papers directly based on the papers in a given folder. My most favorite S2 feature is however hands downSemantic Reader

, currently in beta. Reading research papers feels 10x better than with traditional PDF viewers - give it a try! The speed at which AI innovation moves from an arxiv upload to production has never been this lightning fast. Startup CTOs/CSOs need to stay on top of what's happening in AI/ML research, and my "unbiased" :) advice is that S2 gives you the best tool to do just that. Happy birthday, Semantic Scholar!AI2 Incubator Companies

AI-first, full-stack drug discovery and development

Exscientia

Recursion Pharmaceuticals

Large Language Models

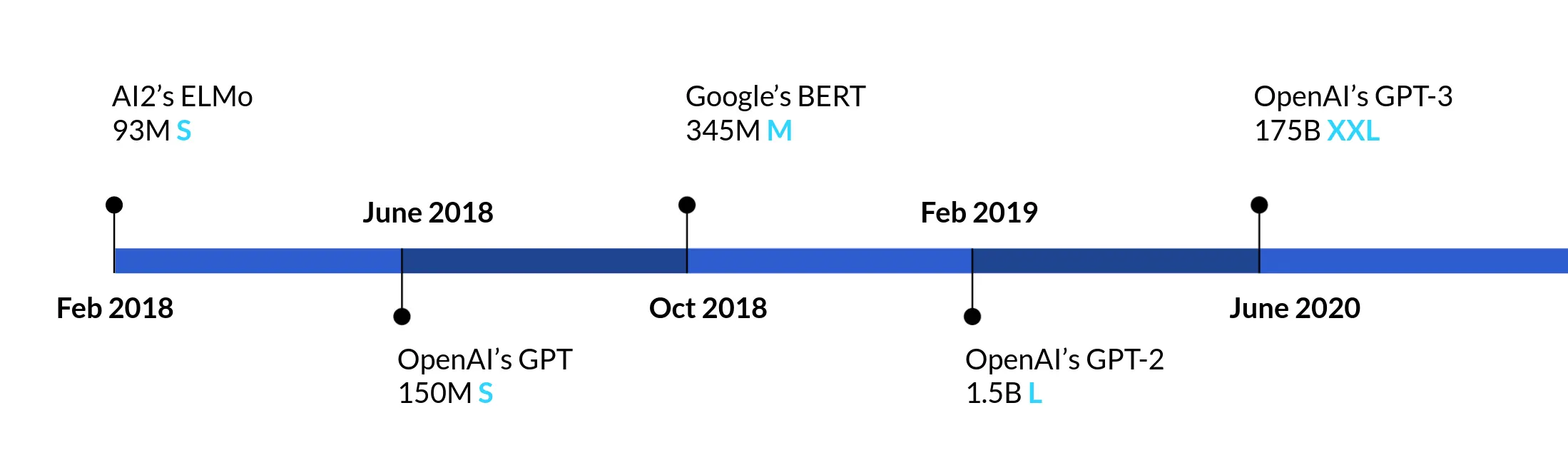

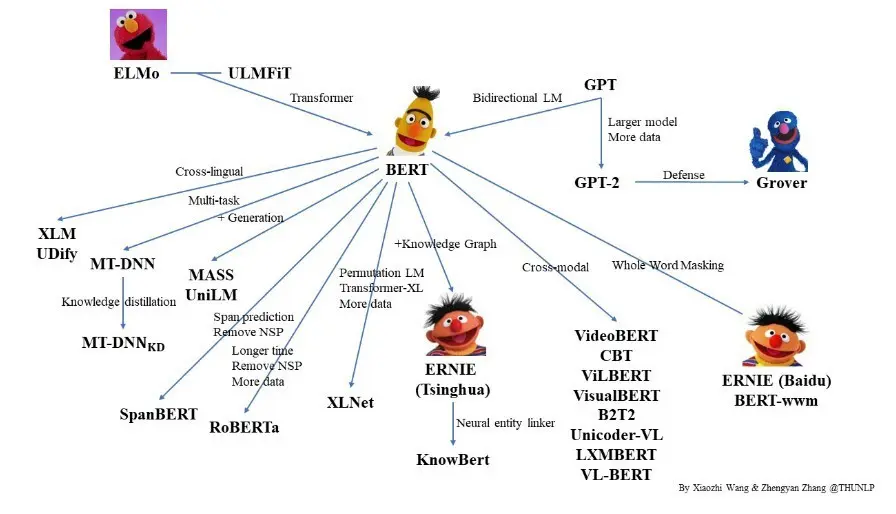

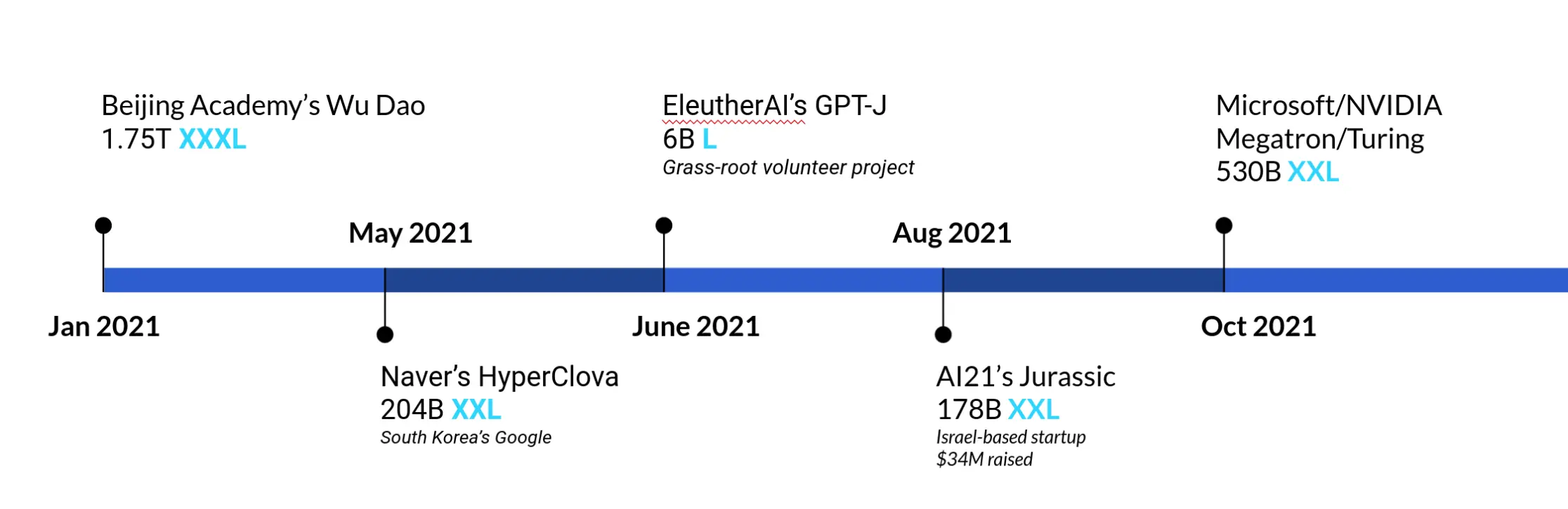

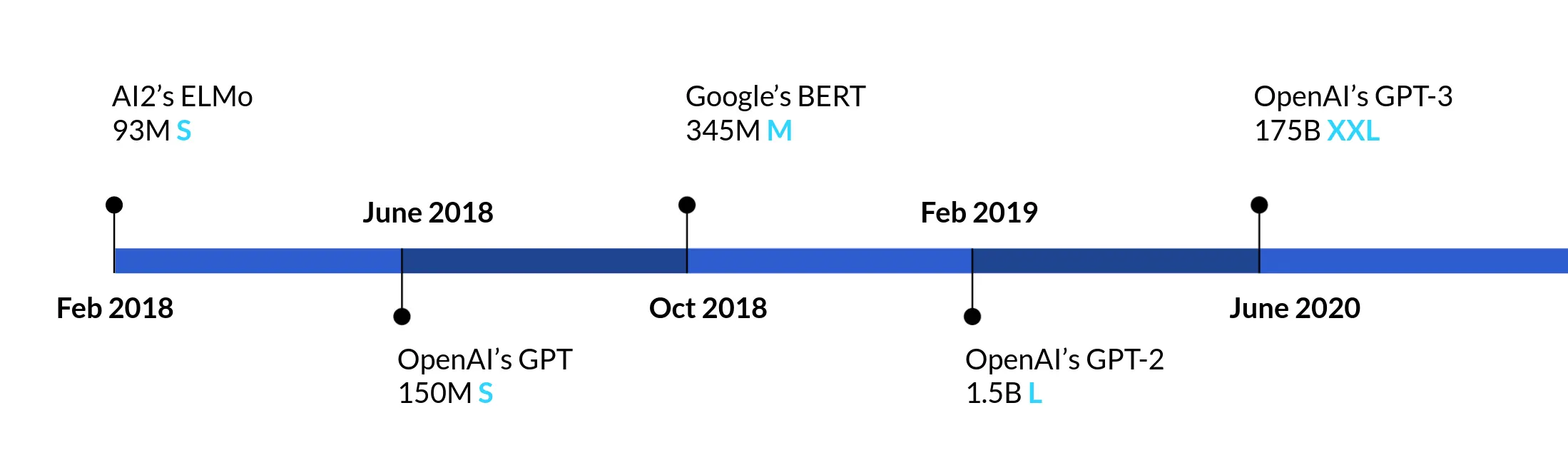

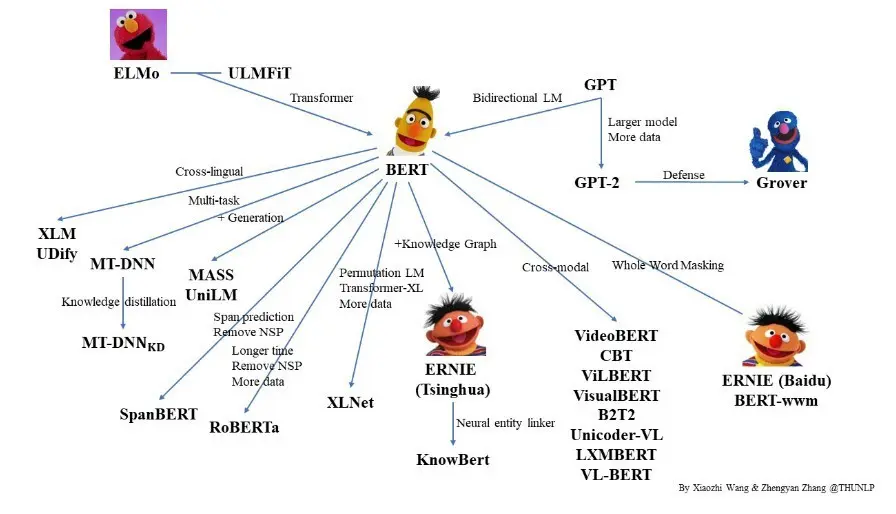

The History of LLMs since ELMo

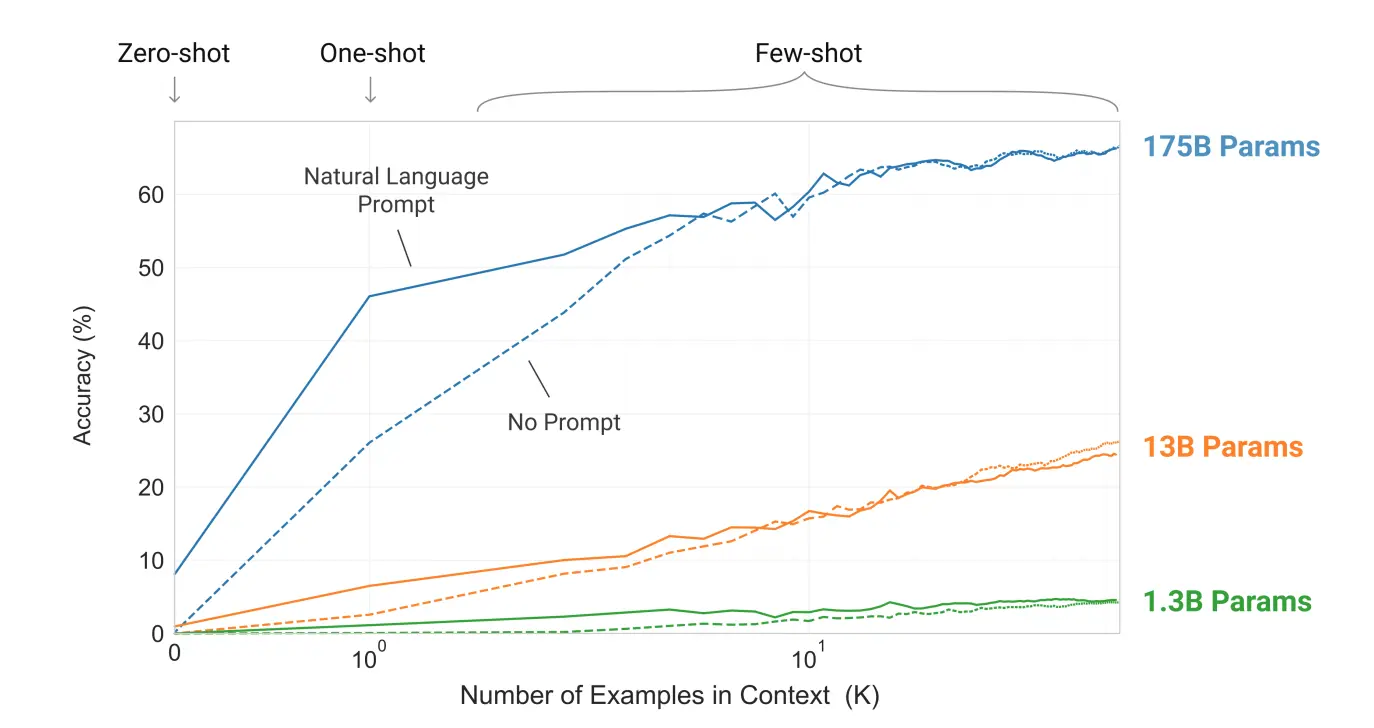

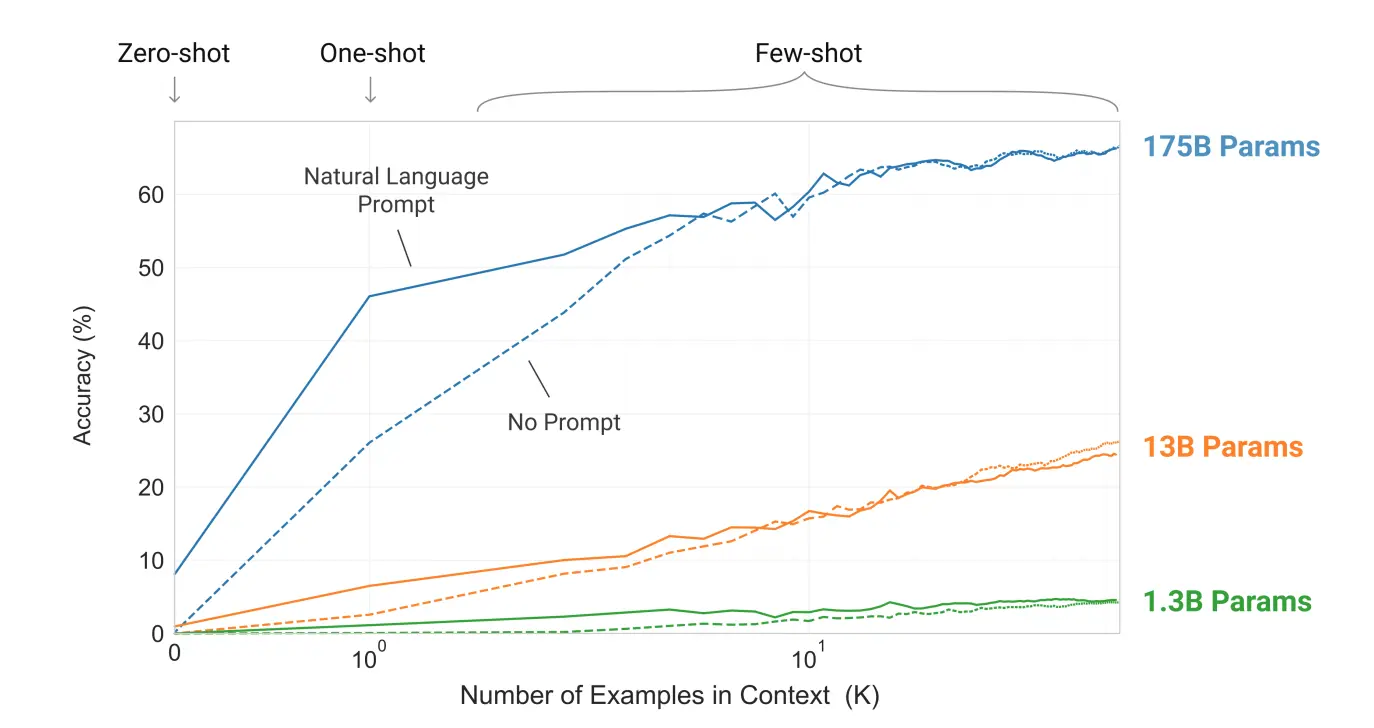

LLMs' New-Found Power: Learning Efficiency

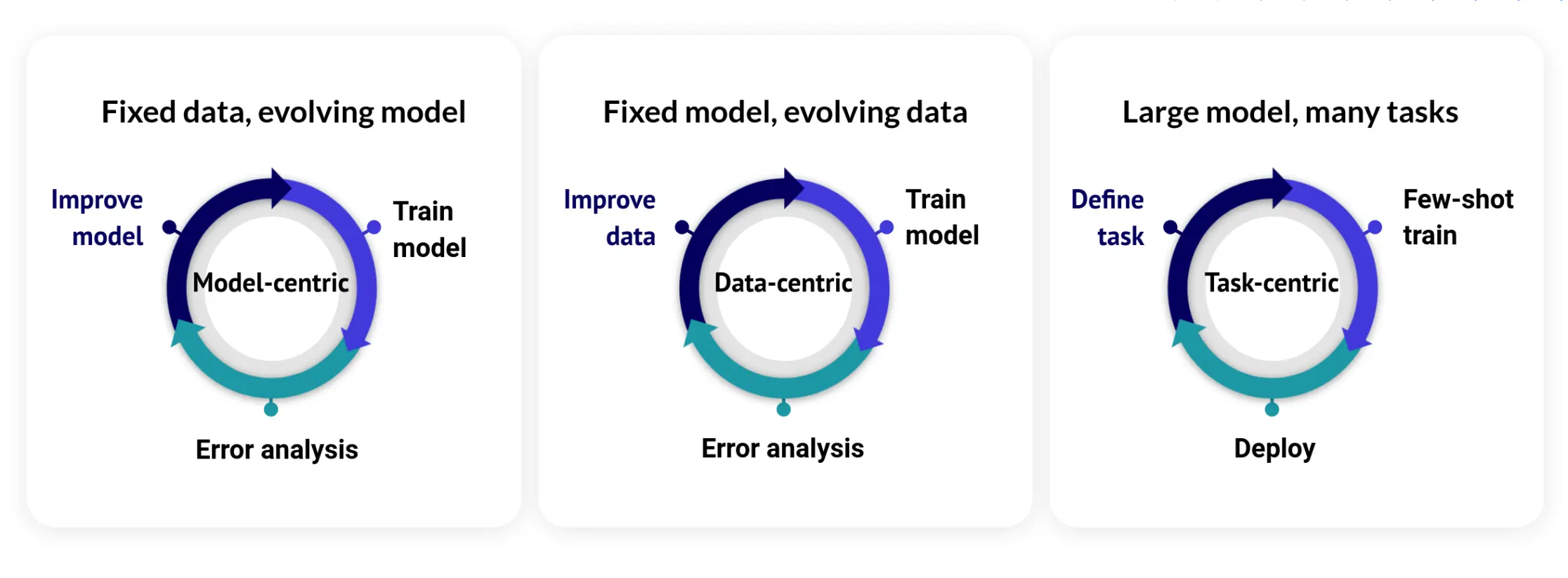

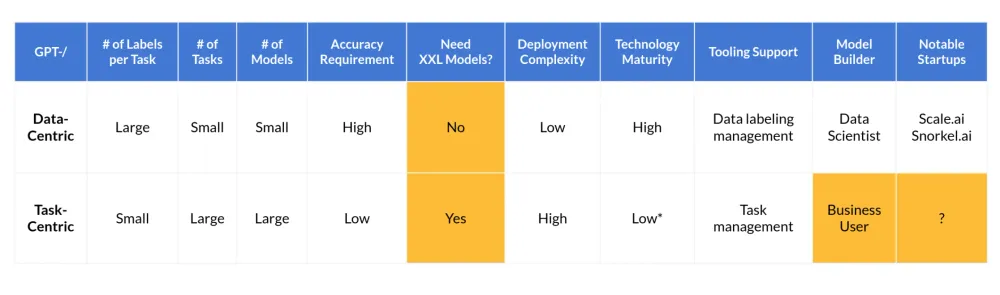

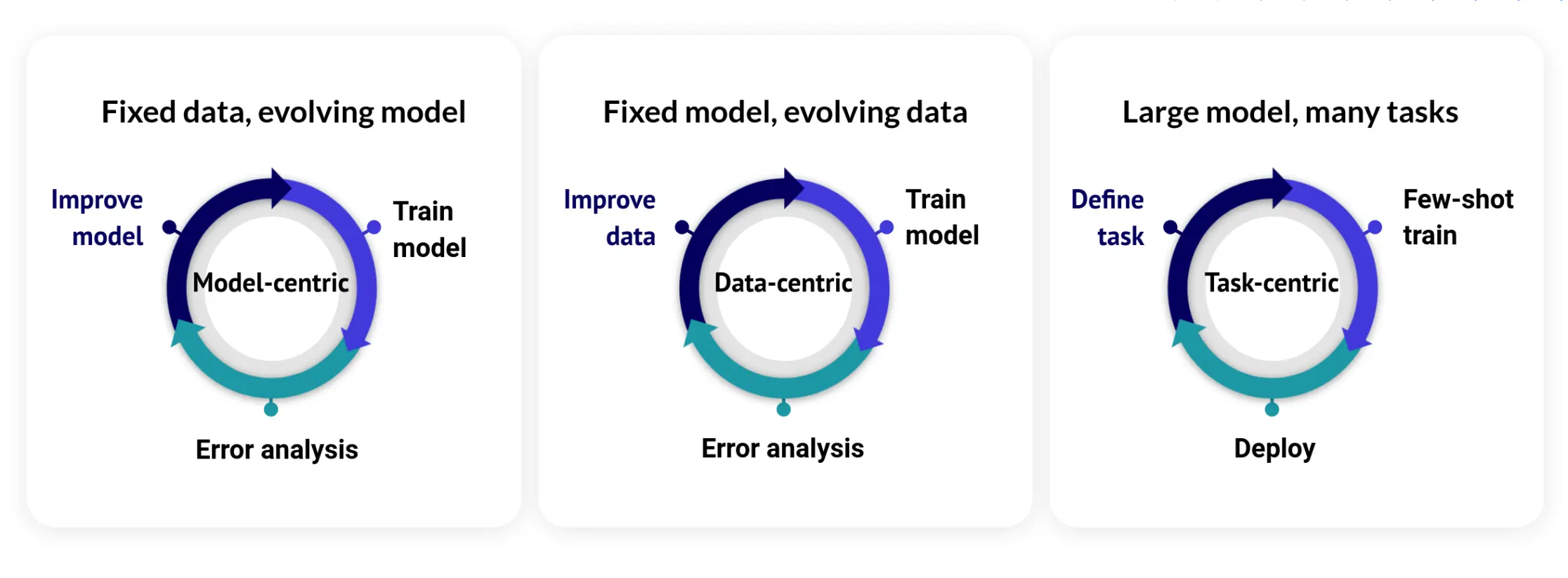

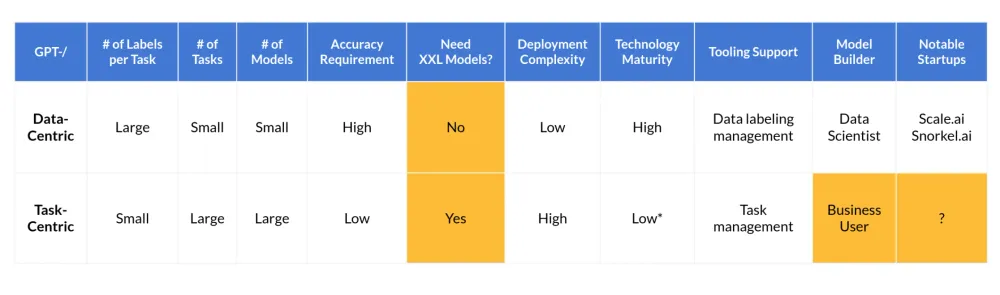

Task-Centric AI

data-centric AI. The TLDR of data-centric AI, for me personally, is GIGO, or garbage in, garbage out: we should focus on minimizing the garbage-ness of the data we feed AI.

task-centric instead of data-centric.

- What sort of no-code AI problems exist that are a) painful for lots of customers and b) can only be solved with LLMs?

- How can LLMs be deployed cost-effectively? GPT-3 is rather spendy if used via OpenAI's API with any meaningful traffic. Ah yes, there's also the small inconvenience of feeding your own Cookie Monster lots of GPUs. AI21 Labs did it, and so can the next well-funded startup (bootstrapped startups eschewing the VC path should look elsewhere).

AI Startups

- Mage, developing an artificial intelligence tool for product developers to build and integrate AI into apps, brought in $6.3 million in seed funding led by Gradient Ventures.

- Copy.ai (powered by GPT-3) raised $11M series A.

- Weights & Biases raised $135M series C.

- Domino Data Lab raised $100M series F.

- Immunai raised $215M series B. Holy guacamole!
