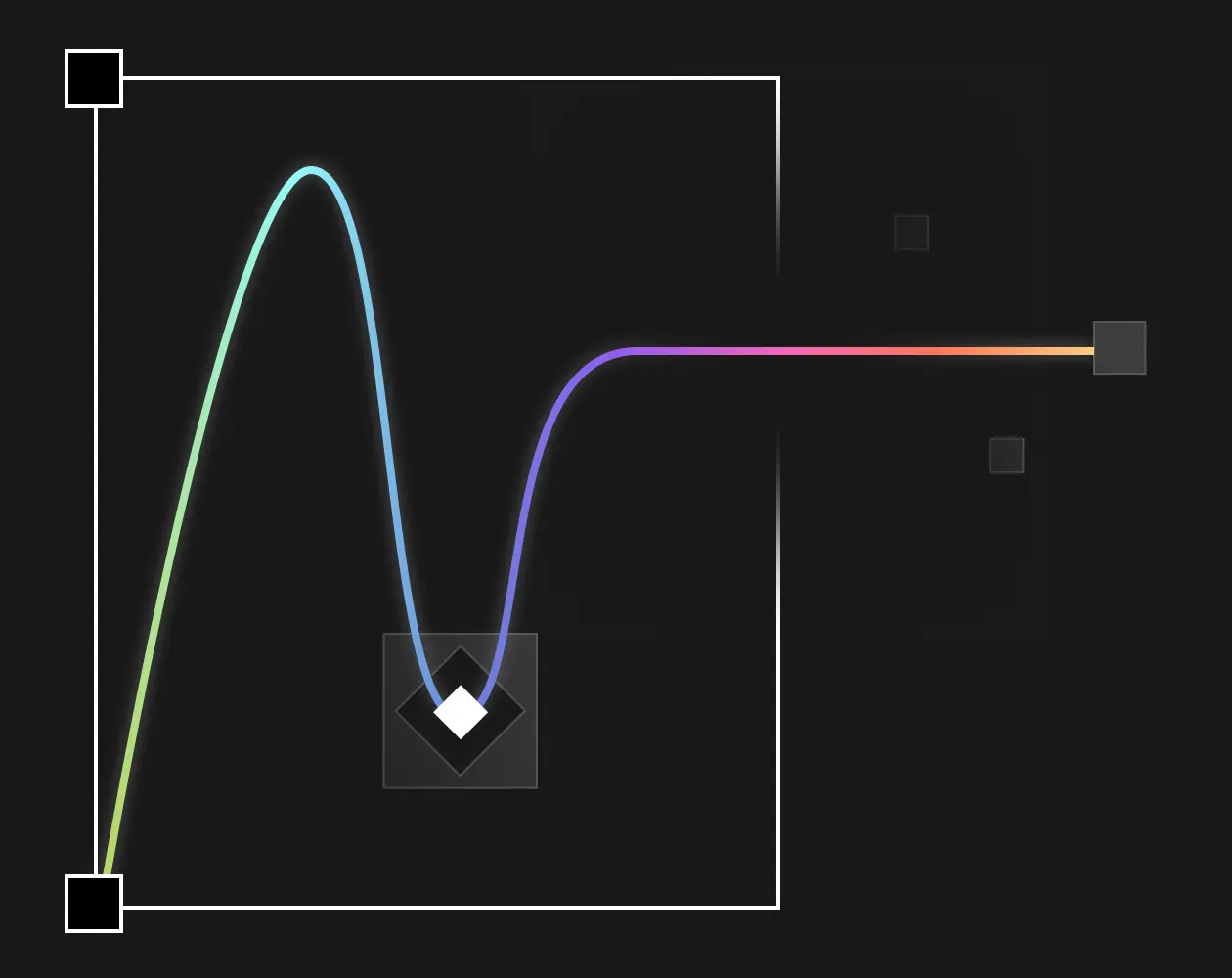

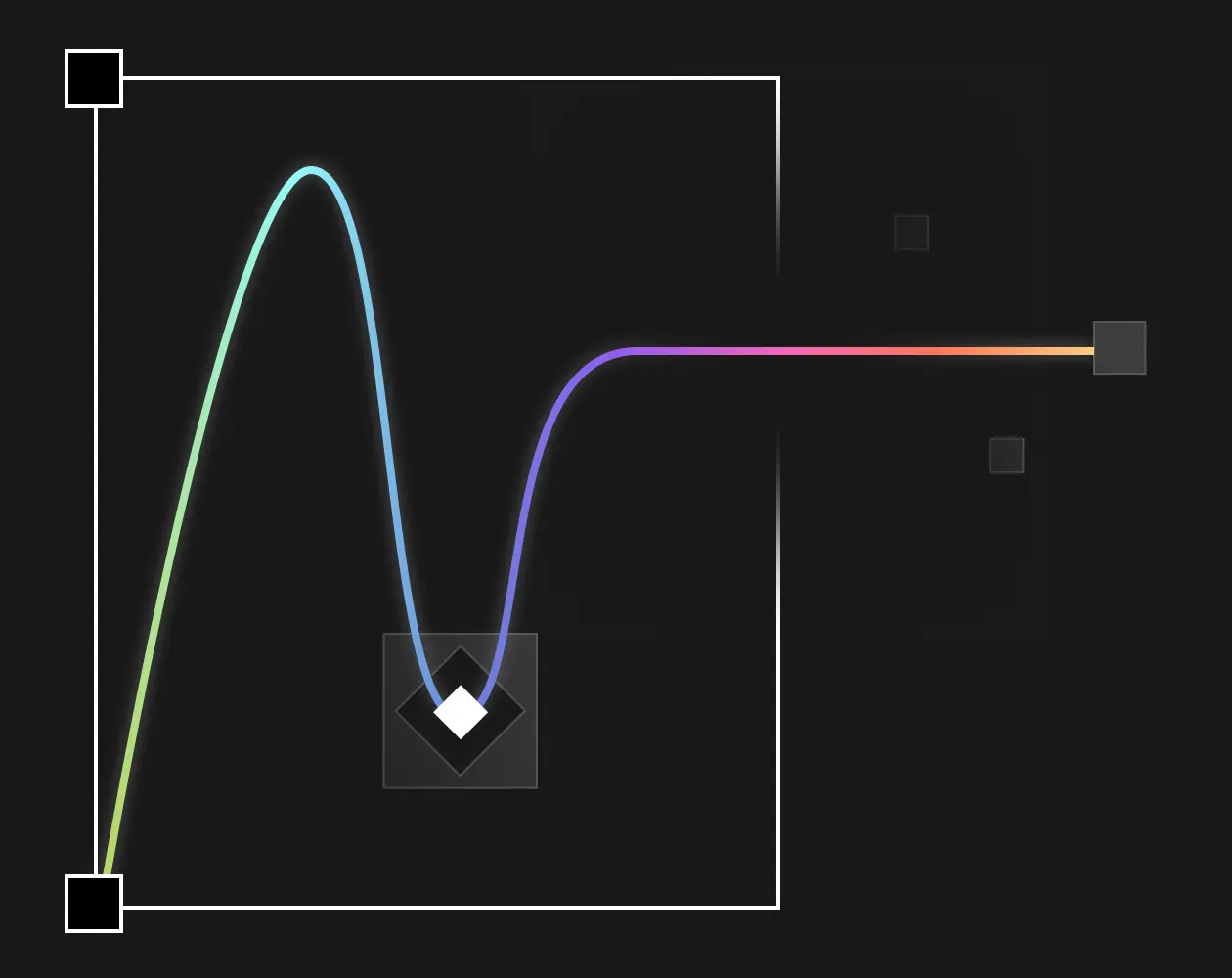

Insight 13: Trough of Disillusionment

This 13th edition of the AI2 Incubator Insight newsletter arrives as we transition from an eventful 2023 into what promises to be an equally dynamic 2024. We discuss a significant limitation of current AI systems: their inability to effectively learn from experiences. This shortcoming suggests that 2024 might be earmarked as a 'trough of disillusionment' period, as we grapple with these challenges without immediate solutions on the horizon. Nevertheless, we continue to see opportunities in generative AI for software and for copilot/assistant use cases. This year will also start a trend of AI development democratization: a broader spectrum of founders can access the necessary infrastructure to advance the frontiers of AI technology. Towards this end, the AI2 Incubator is in advanced discussions to partner with companies that will help pre-seed founders access precious AI computing resources. Let’s dive in!

LLMs Today Lack A Growth Mindset

In her 2006 book titled “Mindset”, Carol Dweck discusses the differences between people with a fixed mindset versus those with a growth mindset. The fixed mindset crowd believes their abilities are fixed, whereas the growth mindset folks continuously seek to learn from experiences and failures to improve their abilities. The current crop of LLM-powered products largely lacks the ability to learn from its mistakes (aka hallucinations). Existing LLMOps solutions typically offer a limited ability to observe (e.g. what chains of prompts result in a mistake) or evaluate using crude metrics. Techniques such as retrieval augmented generation (RAG) and vector databases are useful stopgap solutions to improve accuracy, but they contribute no progress towards making LLMs learn. In this sense, LLM systems are not learning systems; they are what-I-hallucinate-is-what-you-get!

What does a system with a growth mindset look like? Consider a modern search engine such as Google or Bing. When a searcher clicks on a link that is not the top-ranked link, that click behavior is recorded and used to update the search engine’s ranker so that the clicked link will likely be ranked higher in the future. Learning from billions of clicks, search engines keep improving over time as searchers use them. They learn from their mistakes, as indicated by searcher behaviors: automatically, continuously, and at a massive scale. Following this analogy, the current LLM systems are like the Alta Vista and Infoseek of the Nineties. They rely on prompt tuning, chunk sizing, and embeddings, just as those first-generation search engines relied on stemming, TF-IDF, hard-coded synonym lists, etc. to create fixed rankers that lack a growth mindset.
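To make the click-feedback loop concrete, here is a minimal sketch in Python. It is a toy illustration under our own assumptions, not how Google or Bing actually implement ranking: the class name `ClickFeedbackRanker`, the per-(query, URL) score table, and the fixed `learning_rate` are all hypothetical simplifications.

```python
from collections import defaultdict


class ClickFeedbackRanker:
    """Toy ranker (hypothetical): one learned score per (query, url) pair."""

    def __init__(self, learning_rate=0.1):
        self.learning_rate = learning_rate
        self.scores = defaultdict(float)  # every pair starts at 0.0

    def rank(self, query, urls):
        # Highest learned score first; sorted() is stable, so ties keep input order.
        return sorted(urls, key=lambda url: -self.scores[(query, url)])

    def record_click(self, query, ranked_urls, clicked_url):
        # Each result shown above the clicked one was skipped by the searcher:
        # nudge the skipped result down and the clicked result up.
        for url in ranked_urls:
            if url == clicked_url:
                break
            self.scores[(query, url)] -= self.learning_rate
            self.scores[(query, clicked_url)] += self.learning_rate


ranker = ClickFeedbackRanker()
results = ranker.rank("growth mindset", ["a.com", "b.com", "c.com"])
ranker.record_click("growth mindset", results, "c.com")
print(ranker.rank("growth mindset", ["a.com", "b.com", "c.com"]))
```

After a single click on the third result, the toy ranker moves it to the top for that query. A production system would aggregate many clicks and correct for position bias, but the point stands: usage itself is the training signal.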

Satya Nadella used Carol Dweck’s book as an inspiration to change Microsoft’s culture from hard-charging know-it-all to collaborative learn-it-all, multiplying the company’s market cap 10X along the way. Today’s LLMs sometimes show traces of that confidently wrong, know-it-all behavior (e.g. in insisting that the square root of 4 is an irrational number). GenAI startups can yield big returns if LLMs can be guided to learn-it-all. Unlike organizations and people that can change with the right leadership and coaching, LLMs need a breakthrough to become learn-it-all.

In neural network literature, back-propagation is the central learning algorithm that allows a neural network to propagate errors (or losses in technical jargon) backward to weights across different network layers. This weight update gradually nudges the networks to reduce errors and learn the correct behavior. What is currently missing is a way for LLMs to back-propagate from hallucination (BPFH). We don’t see such a solution in the near term. We don’t feel the AGI just yet. We believe BPFH is a necessary condition and a grand challenge for AGI.
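For readers less familiar with back-propagation, the sketch below shows the idea on the smallest network we could think of: two scalar weights with a `tanh` in between. The network shape, starting weights, target, and learning rate are illustrative assumptions, and this is ordinary back-propagation on a known target, not a solution to BPFH.

```python
import math


def train_step(w1, w2, x, target, learning_rate=0.1):
    """One forward and backward pass for the toy network y = w2 * tanh(w1 * x)."""
    # Forward pass: compute the prediction and its squared error (the loss).
    hidden = math.tanh(w1 * x)
    output = w2 * hidden
    loss = (output - target) ** 2

    # Backward pass: propagate the error back to each weight via the chain rule.
    d_output = 2.0 * (output - target)
    d_w2 = d_output * hidden
    d_hidden = d_output * w2
    d_w1 = d_hidden * (1.0 - hidden ** 2) * x  # derivative of tanh is 1 - tanh^2

    # Weight update: nudge each weight against its gradient.
    return w1 - learning_rate * d_w1, w2 - learning_rate * d_w2, loss


def train(steps=200):
    w1, w2 = 0.5, 0.5  # arbitrary starting weights
    losses = []
    for _ in range(steps):
        w1, w2, loss = train_step(w1, w2, x=1.0, target=0.8)
        losses.append(loss)
    return losses


losses = train()
print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.6f}")
```

The loss shrinks because every step has an explicit error signal to propagate. With hallucination there is usually no such signal at the moment the mistake is made, which is the gap BPFH names.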

2024: Trough of Disillusionment?

Six months ago, in Insight #11, we asked the question: Is it a peak of inflated expectation? As 2024 begins, we see signs pointing toward a tough reality check. Our colleagues at Madrona characterize 2024 as the year where executives expect results from 2023’s prototyping efforts. A large percentage of those prototypes will not make the transition to production due to LLMs’ inability to learn, as described above. Many GenAI startups that raised their rich seeds during the frothy early 2023 will fail to show value to their customers and consequently struggle to raise their A rounds.

In Insight #11, we also shared our bear case for LLMOps startups. In 2024, we believe this sector will be hit hard. The space is noisy and volatile, with large enterprise customers likely pulling back investment given the high failure rates of their 2023 prototypes. Products from heavyweights such as Amazon Bedrock have entered the market, deepening the challenge of customer acquisition. In light of the BPFH grand challenge, it is difficult to see how a prompt management tool (to pick just a popular LLMOps feature as an example) can be the linchpin of an enduring company. To succeed, LLMOps startups need to go deep instead of broad, or even pivot to a vertical solution (see below).

Where are the bright spots? We continue to see opportunities for copilot/assistant-like products across industries, including software. Software engineering is a massive industry with opportunities for productivity gains across the stack, from design and front-end to devops and back-end. From a technical standpoint, code generation tools benefit from a) a relatively finite and structured domain and b) the ability to verify the generated code with external systems such as compilers and execution environments. In a recent analysis of generative AI VC trends, PitchBook highlighted code as a standout segment for M&A probability: “Companies in this segment feature high-quality investors, consistent fundraising frequency, and improving headcount”. The AI2 Incubator’s most recent graduate is a stealth startup harnessing AI to level up the chip design and verification process for the semiconductor industry.
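The verification advantage of code generation can be sketched in a few lines of Python. This is a minimal illustration, assuming the generated code arrives as a string; the function name `verify_generated_code` and the sample snippets are hypothetical stand-ins for real LLM output. Note that `exec` on untrusted code is unsafe, so a real system would run this step inside a sandbox.

```python
def verify_generated_code(source, function_name, test_cases):
    """Check generated code with the compiler first, then by executing test cases."""
    # Step 1: the compiler catches syntax errors without running anything.
    try:
        code = compile(source, "<generated>", "exec")
    except SyntaxError as error:
        return False, f"syntax error: {error.msg}"

    # Step 2: the execution environment catches wrong behavior.
    # WARNING: exec runs arbitrary code; sandbox this in any real deployment.
    namespace = {}
    try:
        exec(code, namespace)
        function = namespace[function_name]
        for args, expected in test_cases:
            actual = function(*args)
            if actual != expected:
                return False, f"{function_name}{args} returned {actual!r}, expected {expected!r}"
    except Exception as error:
        return False, f"runtime error: {type(error).__name__}: {error}"
    return True, "ok"


# Hypothetical model outputs for the request "write add(a, b)".
candidates = [
    "def add(a, b) return a + b",            # does not compile
    "def add(a, b):\n    return a - b\n",    # compiles, wrong answer
    "def add(a, b):\n    return a + b\n",    # correct
]
for candidate in candidates:
    print(verify_generated_code(candidate, "add", [((1, 2), 3)]))
```

Each failure message is a concrete, machine-generated error signal that can be fed back to the model for another attempt, something most free-text LLM use cases do not have.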

Open Source AI and a Level Playing Field

In 2023 we witnessed an explosion of LLMs trained from scratch, starting with the release of Llama by Meta AI. A small but growing developer community emerged around open source AI, creating and sharing tools from data processing and fine tuning to evaluation and quantization. An LLM infrastructure stack is rapidly developing, with companies like Hugging Face, OctoML, Lamini, and Together (our startup pick) offering tools for cutting edge AI development. The GPU shortage continues, but we expect it to ease towards the end of 2024. Vendors of alternative AI compute stacks, such as AMD (MI300X), Amazon (Trainium), and Intel (Gaudi), are eager to win over the hearts and minds of AI developers.

This is fantastic news for founders who want to go beyond the confines of packaged APIs from OpenAI, Google, Azure, etc. Today, there are two camps of startup founders: those who have GPUs and those who don’t. A handful of founders with DeepMind/Meta AI pedigrees could raise 8-9 figures to acquire the A100s and H100s. The rest are forced to innovate on top of existing APIs. We believe that 2024 will be more equitable. Innovation doesn’t always come from the privileged. A small group of folks, no matter how talented they are, should not hold exclusive access to resources for innovation. The founders who start a Google of the future may be just a couple of unproven graduate students coming up with a BPFH breakthrough.

At the AI2 Incubator we are working with several cloud providers to secure AI computing resources for our founders at the pre-seed stage. Stay tuned for that announcement!

Advice for Founders

In this section we will use a question and answer format to share a few pieces of advice for founders building GenAI startups. The audience is primarily pre-seed and seed-stage founders.

What LLMs should I use?

OpenAI’s GPT-4x as default, with GPT-3.5 for higher-volume, less critical use cases where accuracy can be traded off for speed and/or cost. Despite tremendous progress by competitors such as Google’s Gemini and Mistral, OpenAI is still ahead by some distance. Our perhaps contrarian take is that this gap is not narrowing any time soon. As technology advisors to founders, we look beyond benchmark numbers (which can be and have been gamed) to try to see the holistic picture. Will your carefully tuned prompts produce the same output when you switch away from OpenAI? Do you have the necessary AI infra chops to self-host an LLM with comparable performance and cost? Stay focused on the user experience that matters to your customer. Consider GPT APIs offered by Azure: They are identical models that may fit your enterprise-grade use cases better.

How do I fine tune?

Despite advances such as low-rank adaptation (LoRA), fine tuning LLMs is still extremely challenging. Make sure you exhaust the potential of in-context learning, following OpenAI’s guide and techniques such as promptbase. Fine tune as a last resort and expect many attempts before succeeding.

What options do I have to reduce hallucination?

How do I recruit an AI expert for the founding team?

Look for non-AI experts: generalist engineers with a growth mindset. They would relish the opportunity to dive into cutting edge AI to solve an important business problem. Help them overcome impostor syndrome, if it exists. They may even help you make inroads toward BPFH.

How about the true AI experts? If you can persuade Karpathy to join, go for it. Realistically, proven LLM experts are in extremely short supply, just like the GPUs they control, so it’s best to bet on and invest in unproven talent: people who a) have proved themselves as outliers in other technical areas such as coding, math, physics, etc. and b) are curious about AI. Prior experience in previous generation AI/ML is helpful but not required.

2024 Prediction: VoiceGPTs

We wrap up Insight #13 with our prediction for 2024. Similar to many other 2024 predictions, we expect multimodal models to take center stage. We are particularly excited about models that combine text and speech modalities, enabling seamless end-to-end conversations that are voice-based. This is in contrast to the current pipelined approach of sandwiching an LLM between a pair of speech-to-text and text-to-speech models, which results in a highly stilted, walkie-talkie-like experience. Multimodal text and speech models, which we refer to as VoiceGPTs, will elevate the popular ChatGPT experience beyond the confines of the keyboard. Imagine having a natural conversation about any topic with a VoiceGPT on your Alexa, Siri, or Home device. This is a highly non-trivial technical challenge. We will only see a preview of such technology in 2024.
